Researchers at North Carolina State University have developed new bandwidth-allocation and prefetching techniques for processors that could boost performance by up to 40 percent. Any data not stored in a CPU’s cache must be pulled from RAM, and as more cores are added, they compete for memory access and create a bottleneck. Multi-core chips are designed to let machines run faster, but a core often has to fetch data that is not available in its on-chip memory.

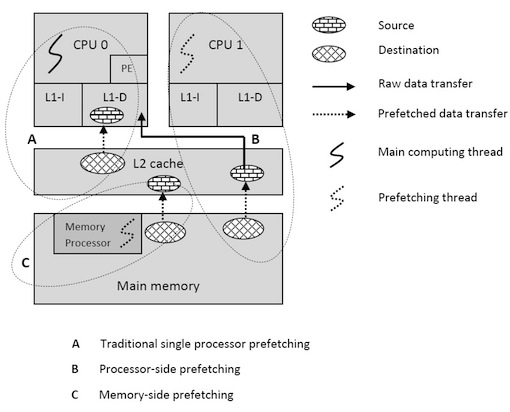

Researchers from North Carolina State University have developed two new techniques to help maximize the performance of multi-core computer chips by allowing them to retrieve data more efficiently, which boosts chip performance by 10 to 40 percent. To do this, the new techniques allow multi-core chips to handle two things more efficiently: allocating bandwidth and “prefetching” data.

Multi-core chips are supposed to make our computers run faster. Each core on a chip is its own central processing unit, or computer brain. However, there are things that can slow these cores down. For example, each core needs to retrieve data from memory that is not stored on its chip. There is a limited pathway, or bandwidth, that these cores can use to retrieve that off-chip data. As chips have incorporated more and more cores, the bandwidth has become increasingly congested, slowing down system performance.

One of the ways to expedite core performance is called prefetching. Each chip has its own small memory component, called a cache. In prefetching, the cache predicts what data a core will need in the future and retrieves that data from off-chip memory before the core needs it. Ideally, this improves the core’s performance. But if the cache’s prediction is inaccurate, it unnecessarily clogs the bandwidth while retrieving the wrong data. This actually slows the chip’s overall performance.
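The cost of an inaccurate prediction can be illustrated with a toy calculation. The Python sketch below is not taken from the research; it assumes a deliberately simple model in which every issued prefetch costs one off-chip transfer and every prefetch that is actually used removes exactly one demand miss. The function name `offchip_transfers` and the numbers are hypothetical.

```python
def offchip_transfers(demand_misses, prefetches_issued, prefetches_used):
    """Toy model: count off-chip transfers in one interval.

    Assumption (not from the research): each used prefetch removes exactly
    one demand miss, and every issued prefetch costs one transfer whether
    or not the core ever uses the data.
    """
    if not 0 <= prefetches_used <= prefetches_issued:
        raise ValueError("prefetches_used must be between 0 and prefetches_issued")
    covered = min(prefetches_used, demand_misses)
    remaining_misses = demand_misses - covered
    return remaining_misses + prefetches_issued


# Illustrative numbers only.
baseline = offchip_transfers(1000, 0, 0)         # prefetching off
accurate = offchip_transfers(1000, 1000, 900)    # 90% of prefetches used
inaccurate = offchip_transfers(1000, 1000, 200)  # 20% of prefetches used
```

Under these assumptions, accurate prefetching adds little traffic while fetching most data ahead of time, whereas inaccurate prefetching nearly doubles the load on the shared pathway.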

“The first technique relies on criteria we developed to determine how much bandwidth should be allotted to each core on a chip,” says Dr. Yan Solihin, associate professor of electrical and computer engineering at NC State and co-author of a paper describing the research. Some cores require more off-chip data than others. The researchers use easily collected data from the hardware counters on each chip to determine which cores need more bandwidth. “By better distributing the bandwidth to the appropriate cores, the criteria are able to maximize system performance,” Solihin says.

“The second technique relies on a set of criteria we developed for determining when prefetching will boost performance and should be utilized,” Solihin says, “as well as when prefetching would slow things down and should be avoided.” These criteria also use data from each chip’s hardware counters. The prefetching criteria would allow manufacturers to make multi-core chips that operate more efficiently, because each of the individual cores would automatically turn prefetching on or off as needed.

Using both sets of criteria, the researchers were able to boost multi-core chip performance by 40 percent compared to multi-core chips that do not prefetch data, and by 10 percent over multi-core chips that always prefetch data.
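The article does not spell out the researchers’ actual criteria, so the following Python sketch only illustrates the general shape of counter-driven decisions. Everything in it is an assumption made for illustration: the counter names in `CoreCounters`, the proportional-share rule in `bandwidth_shares`, and the accuracy and bus-utilization thresholds in `should_prefetch`.

```python
from dataclasses import dataclass


@dataclass
class CoreCounters:
    """Hypothetical per-core hardware counter readings for one interval."""
    demand_misses: int      # off-chip requests the core actually needed
    prefetches_issued: int  # prefetch requests sent off-chip
    prefetches_used: int    # prefetched data the core later used


def bandwidth_shares(cores):
    """Split bandwidth in proportion to each core's off-chip demand.

    Assumed heuristic, not the published criteria.
    """
    total = sum(c.demand_misses for c in cores)
    if total == 0:
        return [1 / len(cores)] * len(cores)  # no demand: split evenly
    return [c.demand_misses / total for c in cores]


def should_prefetch(core, bus_utilization, min_accuracy=0.5, busy_threshold=0.8):
    """Decide whether a core keeps prefetching (assumed thresholds)."""
    if core.prefetches_issued == 0:
        return True  # no evidence yet, so keep sampling
    if bus_utilization < busy_threshold:
        return True  # spare bandwidth makes wrong guesses cheap
    accuracy = core.prefetches_used / core.prefetches_issued
    return accuracy >= min_accuracy


cores = [CoreCounters(300, 100, 90), CoreCounters(100, 100, 20)]
shares = bandwidth_shares(cores)
decisions = [should_prefetch(c, bus_utilization=0.9) for c in cores]
```

In this sketch the first core, which misses more often and prefetches accurately, receives the larger share and keeps prefetching, while the second core turns prefetching off when the shared pathway is busy.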
