Nvidia’s new graphics card, the GeForce GTX 480, is based on the grandly named Fermi architecture and packs some very impressive hardware under the hood. The card has 1.5GB of fast GDDR5 RAM, an exotic heat-pipe based cooling system and an impressive three billion transistors on a card just 10.5 inches long.

Fermi Graphics Architecture in a Nutshell

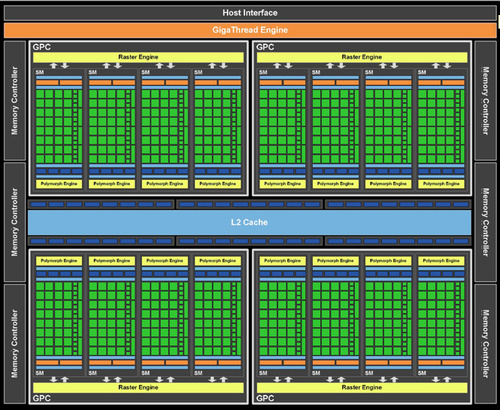

Nvidia designed the GTX 480’s architecture from the ground up, with the goal of combining best-of-class graphics performance with a robust compute component for GPU compute applications. The architecture is modular, consisting of groups of ALUs (“CUDA cores”) assembled into blocks of 32, along with texture cache, memory, a large register file, a scheduler and what Nvidia calls the PolyMorph Engine. Each of these blocks is known as an SM, or streaming multiprocessor.

The warp scheduler ensures that threads are assigned to the compute cores. The PolyMorph engine takes care of vertex fetch, contains the tessellation engine and handles viewport transformation and stream output.

Each CUDA core inside the SMs is scalar, and is built with a pipelined integer ALU and a floating point unit (FPU) which is fully IEEE 754-2008 compliant. The FPU can handle both single- and double-precision floating point operations. Each SM has a 64KB memory pool. When used for graphics, the 64KB is split into 16KB of L1 cache and 48KB of shared memory. The SMs are grouped into four graphics processing clusters (GPCs) connected to the raster output engines. The GPCs share 768KB of L2 cache. Six memory controllers manage access to the GDDR5 memory pool.

It’s About Geometry Performance

Prior generations of GPUs built on DirectX 10 and earlier radically improved texturing and filtering performance over time. Better image quality came through effects like normal mapping (bump mapping) to create the illusion of greater detail with flat textures.

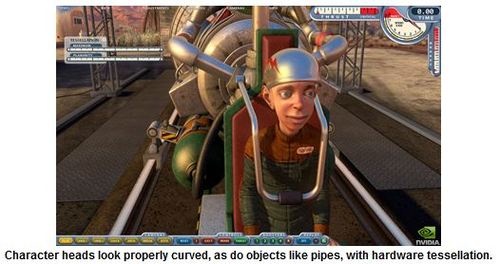

DirectX 11 supports hardware tessellation. Hardware tessellation works with a base set of geometry with predefined patches. The DX11 tessellation engine takes that patch data and procedurally generates triangles, increasing the geometric complexity of the final object. This means that heads become rounder, gun barrels aren’t octagons and other geometric details appear more realistic.
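To make the idea of procedurally generating triangles from a patch concrete, here is a minimal Python sketch. It is an illustration only, not the DirectX 11 tessellator: it assumes a single flat triangular patch and one uniform subdivision factor, and the names `tessellate` and `factor` are hypothetical. Real hardware tessellation also repositions the generated vertices so that curved surfaces actually become rounder, which this sketch leaves out.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[Point, Point, Point]


def tessellate(patch: Triangle, factor: int) -> List[Triangle]:
    """Uniformly split one triangular patch into factor**2 smaller triangles."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    a, b, c = patch

    def point(i: int, j: int) -> Point:
        # Barycentric weights: i steps toward b, j steps toward c, the rest on a.
        w_a = (factor - i - j) / factor
        w_b = i / factor
        w_c = j / factor
        return tuple(w_a * pa + w_b * pb + w_c * pc
                     for pa, pb, pc in zip(a, b, c))

    triangles: List[Triangle] = []
    for i in range(factor):
        for j in range(factor - i):
            # Triangle with the same orientation as the original patch.
            triangles.append((point(i, j), point(i + 1, j), point(i, j + 1)))
            # Inverted triangle that fills the gap, where one fits inside the patch.
            if i + j < factor - 1:
                triangles.append((point(i + 1, j), point(i + 1, j + 1), point(i, j + 1)))
    return triangles


patch = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for factor in (1, 2, 4, 8):
    print(factor, len(tessellate(patch, factor)))
```

The triangle count grows with the square of the factor, which is why geometry throughput matters so much once tessellation is turned up.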

The hardware tessellator that’s built into the PolyMorph Engine is fully compliant with DirectX 11 hardware tessellation. Given that both major GPU suppliers are now shipping DirectX 11 capable parts, hardware tessellation may finally bring an end to blocky, angular heads on characters.

Image Quality Enhancements

The 480 GTX increases the numbers of texture and ROP units, as well as scaling up raw computational horsepower in the SMs. This allows the card to take effects like full scene anti-aliasing to the next step. Nvidia suggests that 8x anti-aliasing is possible in most games with only a slight performance penalty over 4x AA. The new GPU will also enable further AA capabilities, such as 32x CSAA (coverage sample anti-aliasing) and improved AA with transparent objects.

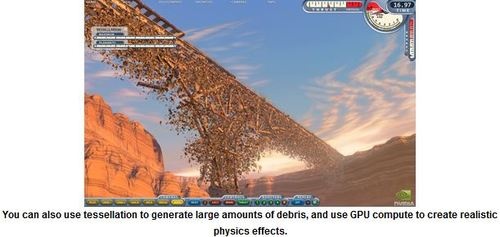

As with prior Nvidia GPUs, the company is talking up performance in GPU compute. This translates directly into more robust image quality effects, including physics and post-processing effects such as better water effects, improved depth-of-field and specialized effects like photographic background bokeh.

Read here for a deeper dive into Fermi graphics architecture.

The GeForce GTX 480

When Nvidia rolled out the GF100 graphics architecture in January, they talked about a chip with 512 CUDA cores. As it turns out, the 480 GTX is shipping with only 480 cores enabled – one full SM is disabled. It’s uncertain whether this is because of yield problems. Even using a 40nm process, the GTX 480 chip is massive. Alternatively, Nvidia may have disabled an SM because of power issues – the GTX 480 already consumes 250W at full load, making it one of the most power hungry graphics cards ever made.

Note that the “480” in GTX 480 doesn’t refer to the 480 CUDA cores. Nvidia is also launching the GeForce GTX 470, which ships with 448 active computational cores. See the chart for the speeds and feeds, alongside the current single GPU Radeon HD 5870.
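The core counts follow directly from the SM layout described earlier. As a quick check, the Python sketch below assumes only what the article states: 32 CUDA cores per SM and 512 cores on the full chip. The helper names are ours, chosen for illustration.

```python
CORES_PER_SM = 32       # CUDA cores are grouped into blocks of 32, one block per SM
FULL_CHIP_CORES = 512   # core count Nvidia quoted for the full GF100 chip


def active_sms(active_cores: int) -> int:
    """Number of enabled SMs implied by a card's active CUDA core count."""
    sms, remainder = divmod(active_cores, CORES_PER_SM)
    if remainder:
        raise ValueError("core count is not a whole number of SMs")
    return sms


def disabled_sms(active_cores: int) -> int:
    """Number of SMs switched off relative to the full chip."""
    return active_sms(FULL_CHIP_CORES) - active_sms(active_cores)


for card, cores in (("GTX 480", 480), ("GTX 470", 448)):
    print(f"{card}: {active_sms(cores)} SMs active, {disabled_sms(cores)} disabled")
```

By this arithmetic the GTX 480 runs 15 of 16 SMs, and the GTX 470 runs 14.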

What’s notable, beyond the sheer number of transistors, is the number of texture units and ROPs – both exceed what’s available in the Radeon HD 5870. It’s also worth noting the maximum thermal design power. It’s rated at 250W, or 62W more than the Radeon HD 5870. In practice, we found the differences to be higher (see the benchmarking analysis for power consumption numbers.)

Power and Connectivity

Since the new cards are so power-hungry, Nvidia’s engineers designed a sophisticated, heat-pipe based cooler to keep the GPU and memory within the maximum rated 105 degrees C operating temperature. When running full bore, the cooling fan spins up and gets pretty loud, but it’s no worse than AMD’s dual GPU Radeon HD 5970. It is noticeably louder than the single chip Radeon HD 5870, however.

The cooling system design helped Nvidia build a board that’s just 10.5 inches long, a tad shorter than the Radeon HD 5870 and much shorter than the foot-long Radeon HD 5970. Given the thermal output, however, buyers will want to ensure their cases offer robust airflow. Nvidia suggests a minimum 550W PSU for the GTX 470 and a 600W rated power supply for the 480 GTX. The 480 GTX we tested used a pair of PCI Express power connectors – one 8-pin and one 6-pin.

Unlike AMD, Nvidia is sticking with a maximum of two displays with a single card. All the cards currently shipping will offer two dual-link DVI ports and one mini-HDMI connector. Any two connectors can be used in dual panel operation. Current cards do not offer a DisplayPort connector.

Nvidia is also beefing up its 3D Vision stereoscopic technology. Wide screen LCD monitors are now available with 120Hz refresh support in full 1920×1080 (1080p) resolution. One card will drive a single 1080p panel. If your wallet is healthy enough to afford a pair of GTX 400 series cards, 3D Vision is being updated so that you can have up to three displays running in full stereoscopic mode.

What’s the price of all this technological goodness? Nvidia is targeting a $499 price point for the 480 GTX and $349 for the 470 GTX. Actual prices will vary, depending on supply and overall demand.

The burning question, of course, is: when can you get one? Rumors have been flying around about yields and manufacturing issues with the Fermi chip. Nvidia’s Drew Henry stated categorically that “tens of thousands” would be available on launch day. We’ll just have to wait to see what that means for long term pricing and availability.

It’s possible we’re seeing the end of the era of brute-force approaches to building GPUs. The GTX 480 pushes the edge of the envelope in both performance and power consumption – and that’s with 32 CUDA cores (one full SM) disabled. So even at 250 watts or more, we’re not seeing the full potential of the chip.

In the end, the 480 GTX offers superlative single GPU performance at a suggested price point that seems about right. It does lack AMD’s Eyefinity capability and its hunger for watts is unparalleled. Is the increased performance enough to bring gamers back to the Nvidia fold? If efficiency matters, gamers may be reluctant to adopt such a power-hungry GPU. The performance of the Radeon HD 5870 is certainly still in the “good enough” category, and that card is $100 cheaper and consumes substantially less power. If raw performance is what counts, the 480 GTX will win converts. Only the fickleness of time, availability and user desires will show us which approach wins out over the long haul.

GTX 480: Best Single GPU Performance

Our test system consisted of a Core i7 975 at 3.3GHz, with 6GB of DDR3 memory running at 1333MHz, on an Asus P6X58D Premium motherboard. Storage included a Seagate 7200.12 1TB drive and an LG Blu-ray ROM drive. The power supply was a Corsair TX850w 850W unit.

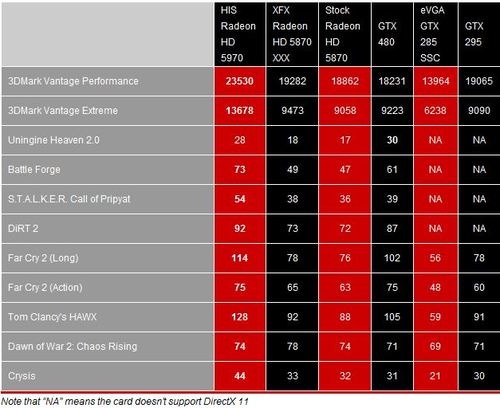

We’re adding in performance of 3DMark Vantage as a matter of interest; FutureMark’s 3D performance test is increasingly antiquated, and not really a useful predictor of gaming performance.

We tested six different graphics cards, including a standard Radeon HD 5870 and the factory overclocked Radeon HD 5870 XXX edition. We also included results from older Nvidia cards, including the aggressively overclocked eVGA 285 GTX SSC and a reference 295 GTX. Also included was an HIS Radeon HD 5970, built with two Radeon HD 5870 GPUs.

The Radeon HD 5970 won most of the benchmarks. One interesting exception is the recently released Unigine 2.0 DX11 test. If you scale up tessellation to “extreme,” the GTX 480 edges out the dual GPU AMD solution.

1920×1200, 4xAA

Let’s check out performance first at 1920×1200, with 4xAA.

The GTX 480 wins about half the benchmarks against the single GPU Radeon HD 5870, and essentially ties in the rest. Where it does win, however, it generally wins big.

The GTX 480 “wins” in another test – power consumption – but not in a good way. The system idled at 165W with the GTX 480, exceeded only by the dual GPU HD 5970’s 169W. However, at full load, the 480 GTX gulped down 399W – 35W more than the 5970 and fully 130W more than the Radeon HD 5870 at standard speeds.
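Since we quote the other cards only as differences from the GTX 480, it can help to work the numbers through. The short Python sketch below derives the implied full-load figures and the relative gap. These are whole-system readings, not card-only power, and the helper name `percent_more` is our own.

```python
# Whole-system power draw at full load, in watts, as quoted above.
GTX_480_LOAD_W = 399

# The other two cards are given only as differences from the GTX 480 system.
LOAD_WATTS = {
    "GeForce GTX 480": GTX_480_LOAD_W,
    "Radeon HD 5970": GTX_480_LOAD_W - 35,
    "Radeon HD 5870": GTX_480_LOAD_W - 130,
}


def percent_more(watts: float, baseline: float) -> float:
    """How much more power `watts` is than `baseline`, as a percentage."""
    return (watts - baseline) / baseline * 100.0


for card, watts in LOAD_WATTS.items():
    extra = percent_more(GTX_480_LOAD_W, watts)
    print(f"{card}: {watts}W at load (GTX 480 system draws {extra:.1f}% more)")
```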

Up next, how do the cards perform under more challenging conditions?

1920×1200, 8xAA

Now let’s pump up the AA to 8x and see what happens. Note that our Dawn of War 2: Chaos Rising and Call of Pripyat benchmarks drop off the list. We used the in-game AA setting for Chaos Rising, which only has a single, unlabeled setting for AA, and the STALKER test doesn’t support 8xAA.

Note that “NA” means the card doesn’t support DirectX 11.

What’s immediately evident is that the GTX 480’s performance drops less dramatically with 8x AA enabled than that of the other cards. The dual GPU Radeon HD 5970 still wins most of the tests, but among single GPU cards the GTX 480 is the clear winner in all the benchmarks except for the older Crysis test – and that’s a dead heat.
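One simple way to put a number on "drops less dramatically" is the fractional frame rate lost when moving from 4xAA to 8xAA. The Python sketch below shows the calculation. The frame rates in it are hypothetical placeholders chosen for round arithmetic, not results from our benchmarks, and `aa_penalty` is a name we made up for illustration.

```python
def aa_penalty(fps_4x: float, fps_8x: float) -> float:
    """Fraction of the 4xAA frame rate that is lost when running at 8xAA."""
    if fps_4x <= 0:
        raise ValueError("fps_4x must be positive")
    return (fps_4x - fps_8x) / fps_4x


# Hypothetical placeholder numbers, NOT measured results.
examples = {
    "card with a small 8xAA penalty": (60.0, 54.0),
    "card with a large 8xAA penalty": (60.0, 42.0),
}

for label, (fps_4x, fps_8x) in examples.items():
    print(f"{label}: {aa_penalty(fps_4x, fps_8x):.0%} slower at 8xAA")
```

Comparing penalties rather than raw frame rates separates how well a card scales with AA from how fast it is to begin with.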

So Nvidia’s assertion that the card can run in 8xAA mode with only a small performance penalty looks accurate. With Nvidia’s cards, though, it may be more interesting to run CSAA with transparency AA enabled, assuming the game supports it.

In the end, the 480 GTX, given the current state of drivers, is priced about right – assuming you can actually buy one for $499. It’s about $100 cheaper than the Radeon HD 5970, and users won’t have to worry about dual-GPU issues. At $100 more than a Radeon HD 5870, it’s the fastest single GPU card we’ve seen. If raw performance is what you want, then the 480 GTX delivers, particularly at high AA and detail settings.

The real kicker, however, is power consumption. Performance counts, but efficiency is important, too. The GeForce 480 GTX kicks out high frame rates, but the cost in terms of watts per FPS may be too high for some.

The Role of Drivers

Drivers – those magical software packages that actually enable our cool GPU toys to work – are a critical part of the performance equation. It’s no surprise that AMD released versions of its Catalyst 10.3 drivers, which offered big performance improvements in a number of popular games. AMD has had a good nine months to tune and tweak their DirectX 11 drivers. The timing of this driver release is also no surprise, as both AMD and Nvidia have, in the past, released performance enhanced drivers just as their competitor was about to ship a new card.

The 480 GTX was surprisingly weak on some game tests, while performance on other tests was nothing short of excellent. It’s very likely that performance will increase over time, although some games which have a stronger CPU element, such as real-time strategy games, may not see massive performance gains.

Both Nvidia and AMD have extensive engineering staff dedicated to writing and debugging drivers. AMD has committed to a monthly driver release, but is willing to release hotfix drivers to improve performance on new, popular game releases. Nvidia’s schedule is somewhat irregular, but the company has stepped up the frequency of its driver releases in the past year, as DirectX 11 and Windows 7 have become major forces.

Developing drivers for a brand new architecture is a tricky process, and engineers never exploit the full potential of a new GPU at launch. As we’ve seen with Catalyst 10.3, sometimes a new driver can make an existing card seem new all over again.

Average versus Minimum Frame Rate

A few years ago, Intel commissioned a study to find out what the threshold of pain was when it came to playing games. At what point will lower frame rates affect player experiences? Their research uncovered two interesting data points. First, if a game could maintain a frame rate above 45 fps, then users would tend to remain immersed in the gaming experience. The other factor, however, is wide, sudden variation in frame rate.

If you’re humming along at 100fps, and the game suddenly drops to 48 fps, you notice, even though you’re still above that magical 45 fps threshold.

Modern game designers spend a ton of time tweaking every scene to avoid those sudden frame rate judders. Given the nature of PC gaming, with its wide array of processors and GPUs, gamers will still experience the jarring effects of low frame rates or sudden drops in performance. The goal is to keep those adverse events to a minimum.

One older benchmark that we no longer run, but which is worth checking out for these effects, is the RTS World in Conflict. The built-in benchmark also has a real time bar that changes on the fly as the test is run. Watching the bar drop into the red (very low frame rates) during massive explosions and flying debris was always illuminating.

That’s why we’re generally happy to see very high average frame rates. A game that will run at 100 fps will more likely stay above that 45 fps barrier, though you may see certain scenes drop.
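To show how average and minimum frame rate can tell different stories, here is a minimal Python sketch that summarizes a list of per-frame render times. The 45 fps threshold comes from the study mentioned above. Everything else is an assumption for illustration: the `summarize` helper, the sample frame times, and especially `DROP_RATIO`, an arbitrary cutoff that flags any frame running at less than half the rate of the frame before it.

```python
THRESHOLD_FPS = 45.0  # immersion threshold from the study cited above
DROP_RATIO = 0.5      # assumption: flag frames at under half the previous frame's rate


def summarize(frame_times_ms):
    """Summarize per-frame render times (milliseconds) as frame rate statistics."""
    fps = [1000.0 / t for t in frame_times_ms]
    return {
        # Total frames divided by total time, so slow frames weigh in properly.
        "average_fps": 1000.0 * len(frame_times_ms) / sum(frame_times_ms),
        "minimum_fps": min(fps),
        # Indices of frames that fall below the 45 fps threshold.
        "below_threshold": [i for i, f in enumerate(fps) if f < THRESHOLD_FPS],
        # Indices of frames much slower than the frame immediately before.
        "sudden_drops": [i for i in range(1, len(fps))
                         if fps[i] < fps[i - 1] * DROP_RATIO],
    }


# Hypothetical trace: 100 fps, with one frame rendered at 48 fps.
sample = [10.0, 10.0, 1000.0 / 48.0, 10.0]
print(summarize(sample))
```

In this made-up trace no frame falls below 45 fps, yet the third frame is still flagged as a sudden drop, which matches the 100 fps to 48 fps scenario described above.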

DirectX 11 Gaming

PC game developers seem to be taking up DirectX 11 more quickly than past versions of DirectX. There are some solid reasons for that. Even if you don’t have a DX11 card, installing DX11 will improve performance, since the libraries themselves are now multithreaded.

Here are a few good games that have been recently released with DirectX 11 support:

• Metro 2033. This Russian-made first person shooter is one of the more creepily atmospheric titles we’ve fired up recently. The graphics are richly detailed, and the lighting effects eerie and effective.

• DiRT 2. Quite a few buyers of AMD cards received a coupon for DiRT 2 when the HD 5800 series shipped. The game offers colorful and detailed graphics and good racing challenges, although the big deal made about the water effects was overblown – the water doesn’t look all that good, maybe because the spray looks unrealistic.

• Battlefield: Bad Company 2. While it’s had a few multiplayer teething problems, BC2 has consumed vast numbers of hours of online time, plus has a surprisingly good single player story.

• S.T.A.L.K.E.R.: Call of Pripyat. This is the actual sequel to GSC’s original STALKER title. It seems like a substantial improvement over the Clear Sky prequel. The tessellation certainly helps immersion as you fight alongside or against other stalkers.

• Aliens vs Predator. The new release of the venerable title from Rebellion and SEGA also makes use of hardware tessellation, making the Aliens look even more frightening and all too realistic.

Source: Gizmodo.