The Web has become something like a second language for the world. We use it for everything from personal tasks to official work. But what happens to our security and privacy when we visit a Web page?

The organization behind Firefox, the world’s second most popular Web browser, has embarked on an ambitious project to change this. Instead of forcing people concerned about privacy to scroll through pages of “notwithstanding anything to the contrary,” the Mozilla Foundation is designing a standard set of colored icons to reveal how data-protective–or how intrusive–Web sites are.

It does seem a bit odd that, in the era of the iPad and cars that nearly drive themselves, technologists have been unable to puzzle out a better way to display that privacy information. The Mozilla Foundation’s tentative solution is to employ the leverage it has through Firefox, used by something like 350 million people worldwide, to convince publishers to disclose their privacy practices in a standard way that would be displayed in a Web browser’s address bar.

“The most important thing we can be doing now is to create the information architecture which defines what people should care about privacy,” said Aza Raskin, head of user experience for Mozilla Labs. A list of eight categories used for brainstorming includes whether the Web site shares information with third parties, whether data are retained after use, whether data are encrypted, and whether collected data are personally identifiable.

A preliminary suggestion that has been submitted to the Mozilla Foundation as a set of privacy icons for Firefox.

(Credit: Mozilla.org)

The Mozilla Foundation’s eventual goal is to create icons as easy to understand as care labels on a shirt that say whether it should be dry cleaned or washed in cold water. Using the letter P inside a circle has been discussed, even if it bears an unfortunate resemblance to the ubiquitous blue signs for parking lots, as has borrowing icon ideas from Creative Commons. (The project is unrelated to the ad industry’s recent announcement of a blue “i” icon for behavioral advertising.)

At a meeting last week in Mozilla’s headquarters in Mountain View, Calif., a few dozen attendees including representatives from the Federal Trade Commission began to sketch out how a standard for privacy icons would work. “They were thinking that you might have several icons in the address bar for each site,” said Seth Schoen, staff technologist at the Electronic Frontier Foundation. “Maybe they would be showing things that were good about that site’s privacy practices, and maybe they would be showing things that were bad about that site’s privacy practices.”

Mozilla Labs’ Raskin has been forthright about using privacy icons in the Web browser as a tool to reward and punish. Raskin wrote last month that: “If Firefox encounters a privacy policy that doesn’t have Privacy Icons, we’ll automatically display the icons with the poorest guarantees: your data may be sold to third parties, your data may be stored indefinitely, and your data may be turned over to law enforcement without a warrant, etc.”
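
The fallback rule Raskin describes can be illustrated with a minimal Python sketch. Everything here is hypothetical: the category names, the `WORST_CASE` table, and the `icons_to_display` function are invented for illustration and are not part of any Mozilla design. The sketch also assumes that a site declaring only some categories gets the poorest guarantee for the rest, which the quote does not specify.

```python
from typing import Dict, Optional

# Hypothetical categories, loosely named after the examples in Raskin's quote.
# True means the poorest guarantee applies (the "bad" icon is shown).
WORST_CASE: Dict[str, bool] = {
    "may_be_sold_to_third_parties": True,
    "may_be_stored_indefinitely": True,
    "may_be_given_to_law_enforcement_without_warrant": True,
}


def icons_to_display(declared: Optional[Dict[str, bool]]) -> Dict[str, bool]:
    """Return the icon state to show for a site.

    `declared` is what the site's privacy policy states, or None when the
    policy carries no privacy icons at all.
    """
    # Start from the poorest guarantees, as the quote describes.
    result = dict(WORST_CASE)
    if declared is None:
        return result
    # Assumption: only known categories can override the worst-case default.
    for category, value in declared.items():
        if category in WORST_CASE:
            result[category] = bool(value)
    return result


if __name__ == "__main__":
    print(icons_to_display(None))
    print(icons_to_display({"may_be_stored_indefinitely": False}))
```

Under this design a publisher gains nothing by staying silent: only an explicit claim can replace a worst-case icon.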

Didn’t P3P do this already?

The challenge for the organization will be avoiding the problems that plagued P3P, or Platform for Privacy Preferences, an earlier effort to convince publishers to rate their own sites in a standard manner. Almost from the moment of its launch more than a decade ago, P3P began a long slide into irrelevance, and today major sites like Google.com, Apple.com, CNN.com, and Twitter.com do not use P3P to summarize their privacy policies.

At the time of its creation, though, P3P enjoyed the enthusiastic support of the World Wide Web Consortium and Internet icons like Tim Berners-Lee, who predicted that the technology would become the “keystone to resolving larger issues of both privacy and security on the Web.” In an echo of what’s being planned for Firefox today, Microsoft said in 2001 that Internet Explorer 6 would require ad networks to adopt P3P if they wanted their Web technology to continue to work with the new browser.

One explanation for why P3P died is that it was too complicated; the final specification tops out at a novel-length weight of 49,000 words, while the complete text of Lewis Carroll’s Alice in Wonderland is only 29,000.

Or perhaps there was little actual demand on the part of Internet users, who have been known to divulge their account passwords for a chocolate bar. P3P’s backers did include tech firms that hoped it would head off burdensome government regulation; when the early threat faded, so did P3P’s support. (Lending credence to that theory are the vicious attacks on P3P by privacy activists clamoring for new laws, who dubbed it “pretty poor privacy” at the time.)

Few noticed when a P3P working group officially abandoned the idea in 2007, admitting in a note that “there was insufficient support from current browser implementers.” An August 2009 article quoted Rigo Wenning, the editor of the final P3P draft, as saying: “We did not manage to convince the browsers, that is the big failure.”

How three privacy categories grew to 17

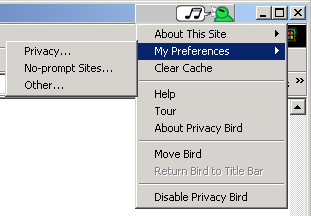

Lorrie Cranor, a member of the P3P working group who has done extensive work on privacy statements as a faculty member at Carnegie Mellon University, says that the challenge of distilling complex and customized privacy policies into a few icons could be insurmountable. Cranor should know: At AT&T, she once created an Internet Explorer plug-in called Privacy Bird–it looks like the offspring of a duck and a parrot–that turns green when a Web site is privacy-protective and red when it is not.

“No matter where you draw the line about what’s in and what’s out, there are companies that just miss, and they argue that their practices aren’t really so bad they should be labeled as ‘bad,’” Cranor said. “And they have some unique business model that requires using data in some way that makes perfect sense to them–and consumers would understand it too if only we created a special category for them to use to explain it.”

Then, Cranor says, the next thing you know is that the original two or three categories have ballooned to 15 or 17 categories to account for all of those situations. “And then the categories are so fine grained and confusing that companies misclassify themselves and users can’t distinguish them anyway.” (If firms agree to a few simple categories, Cranor adds, the Mozilla plan would be “feasible.”)

The privacy icon project is part of a broader effort to ensure that Web users can control their own online experience, and is still “embryonic,” says Mark Surman, executive director of the Mozilla Foundation. The plan is to “bring lawyers and product people together, but also as this unfolds it will be designers, user testing,” Surman said. “Our bigger interest is that users take control of their online lives.”

Mozilla is acutely aware of the problems that bedeviled P3P. Not having industry adoption is “a fail condition,” says Mozilla Labs’ Raskin. “Our thinking right now is that unlike P3P or the Creative Commons approach (when) you force everyone to use a template privacy policy…when you use a privacy icon, it’s only making a very small tangible scope-able claim about use of data.”

Another possible obstacle is inherent in self-rating: major Web sites like Google, Yahoo, and Facebook may decide that their own privacy policies drafted by their own lawyers are fine even though they trigger red warning icons in Firefox. Meanwhile, a phishing site run by the Russian mafia could falsely claim that it protects your privacy absolutely and be recommended in green by a Web browser. Or the privacy ratings may not be broad enough to capture reality.

Some of these issues can be glimpsed through PrivacyFinder.org, a P3P Web search tool created by Cranor’s group at Carnegie Mellon. It awards the U.S. Department of Homeland Security a perfect rating of four out of four green boxes. But Yahoo receives a horrible rating of one of four green boxes. (FDIC.gov is awarded three out of four; National Public Radio receives two of four.)

Mozilla still has yet to resolve “the question of whose privacy policies the icon is for,” said Berin Szoka, director of the center for Internet freedom at the Progress and Freedom Foundation. “Is it the publisher, or third-party ad networks on the page?”

“If you rely on the publisher to do this, they can only describe their own practices,” said Szoka, who attended last week’s meeting. “The problem is if you actually expect a publisher or first party to do that, you’re making them responsible for knowing, and liable for, whatever any third party such as a Web analytics firm, a cookie, or an ad network is doing. It’s not just as simple as describing what kind of data collection that’s going on with your page.”

Source: Declan McCullagh, CNET.com