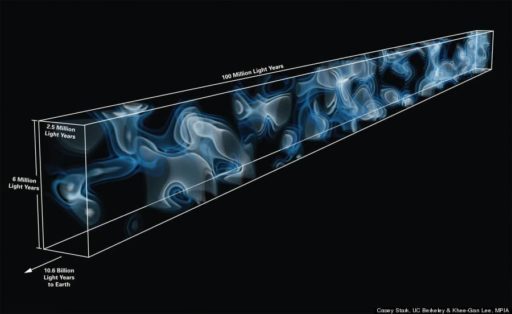

Robots are improving day by day. The intelligent robots of the future will be capable of real-time autonomous navigation because they will carry a continuously updated internal three-dimensional map. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have designed a system based on advanced algorithms and a low-cost interactive camera. The 3D map will help robots explore unknown environments as they move around.

The MIT researchers’ new approach is based on a technique dubbed Simultaneous Localization And Mapping (SLAM). SLAM allows a robot to constantly update its map as it learns new information over time. Until now, SLAM had been tested on robots equipped with expensive laser scanners. The new work demonstrates that a robot can locate itself within such a map using a low-cost camera.

Microsoft’s Kinect is not a robot; it is a game controller that happens to contain a capable, low-cost camera. Using the same kind of camera as the Kinect, a robot can estimate the distance between itself and nearby walls while planning a route around any obstacles. A great deal of research has already been devoted to building one-off navigational maps for robots but, more importantly, such maps cannot adjust to changes in the surroundings over time.

CSAIL researcher Maurice Fallon said, “[For example], if you see objects that were not there previously, it is difficult for a robot to incorporate that into its map. There are also a lot of military applications, like mapping a bunker or cave network to enable a quick exit or re-entry when needed… Or a HazMat team could enter a biological or chemical weapons site and quickly map it on foot, while marking any hazardous spots or objects for handling by a remediation team coming later. These teams wear so much equipment that time is of the essence, making efficient mapping and navigation critical.”

The robot can travel through any space, including areas it has never explored. As it moves, the Kinect sensor’s visible-light video camera and infrared depth sensor scan the surroundings, and from these scans the system builds up a 3D model of the walls and the objects within them. Whenever the robot passes through the same area again, the system compares the features of the new image, in detail, with those of all the previous images it has taken until it finds a match. At the same time, the system estimates its motion with on-board sensors that measure how far the wheels have rotated. Combining the visual information with this motion data determines the robot’s position. Because the system draws on two sources of information, it makes fewer errors than it would with either one alone. Once SLAM has confirmed the robot’s location, new features can be incorporated into the map by combining the old and new images of the scene.
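The idea of combining wheel-rotation measurements with a visual position estimate can be illustrated with a small Python sketch. This is not the CSAIL system, whose actual algorithm is not described here. It assumes a two-wheeled (differential-drive) robot, and it stands in a simple inverse-variance weighted average for whatever estimator the researchers use. The names `Pose`, `wheel_distance`, `odometry_step`, and `fuse`, along with all the numbers, are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose:
    x: float      # meters
    y: float      # meters
    theta: float  # heading in radians


def wheel_distance(rotations: float, wheel_radius: float) -> float:
    """Distance rolled by a wheel that has made `rotations` full turns."""
    return 2.0 * math.pi * wheel_radius * rotations


def odometry_step(pose: Pose, d_left: float, d_right: float,
                  wheel_base: float) -> Pose:
    """Dead-reckon a new pose from the distances rolled by each wheel.

    Assumes a differential-drive robot whose wheels are `wheel_base`
    meters apart (an assumption made for this sketch).
    """
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    # Use the heading halfway through the step as an approximation.
    mid_heading = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_center * math.cos(mid_heading),
        y=pose.y + d_center * math.sin(mid_heading),
        theta=pose.theta + d_theta,
    )


def fuse(odom: float, odom_var: float,
         visual: float, visual_var: float) -> Tuple[float, float]:
    """Inverse-variance weighted average of two estimates of one quantity.

    Returns the fused estimate and its variance. The fused variance is
    always smaller than either input variance.
    """
    weight_odom = visual_var / (odom_var + visual_var)
    fused = weight_odom * odom + (1.0 - weight_odom) * visual
    fused_var = (odom_var * visual_var) / (odom_var + visual_var)
    return fused, fused_var


if __name__ == "__main__":
    # Both wheels turn once (hypothetical 0.1 m radius), so the robot
    # drives straight ahead.
    d = wheel_distance(rotations=1.0, wheel_radius=0.1)
    pose = odometry_step(Pose(0.0, 0.0, 0.0), d, d, wheel_base=0.5)

    # Hypothetical visual fix on the x coordinate, trusted more than odometry.
    x, x_var = fuse(pose.x, 0.04, 0.60, 0.01)
    print(f"odometry x = {pose.x:.3f} m, fused x = {x:.3f} m, variance = {x_var:.4f}")
```

The fused variance comes out lower than that of either source alone, which is one way to see why two sources of information produce fewer errors than one.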

Recently, the team tested the new system on a robotic wheelchair, on a PR2 robot developed by Willow Garage in Menlo Park, Calif., and in a portable sensor suite worn by a human volunteer. The system was able to locate itself within a 3D map of its surroundings while traveling at up to 1.5 meters per second. In time, the algorithm could help robots find their way through almost any environment.

Source: TGDaily
