Text-based search is the most prominent form of search on the internet these days. Most search engines, with the exception of a few specialized ones, require text input before they can search their databases for hits on a topic.

Google demonstrated a new prototype of Google Goggles at the Mobile World Congress that can extract and translate the text within an image.

The prototype uses Google’s machine translation technology and image recognition capabilities to create an additional layer of useful context. For example, if a person photographs a German magazine with an Android device, the application translates the German text into English (right now it only supports German-to-English translation).

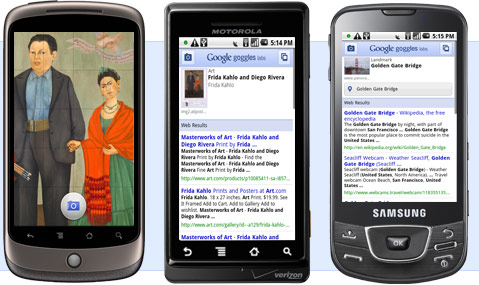

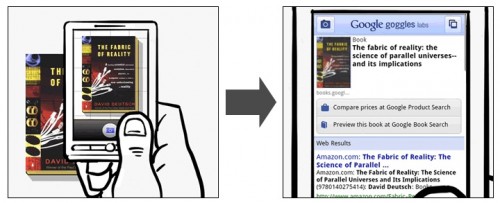

Other applications include identifying artwork, looking up items like books or wine, and scanning contact information on business cards to find out more about the contact.

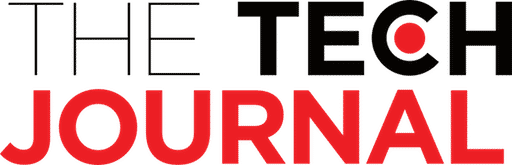

Google Goggles currently also supports photo-based searching for: books, DVDs, landmarks, logos, contact info, artwork, businesses, products, barcodes, and plain text.

Here’s how it works: When you capture an image, Google breaks it down into object-based signatures. It then compares those signatures against every item it can find in its image database. Within seconds, it returns the results to you, ordered by rank. Some results are returned before you even snap a photo, too, thanks to seamless integration of GPS and compass functionality.
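To make the signature-and-rank idea concrete, here is a minimal sketch in Python. It is not Google's actual method: it swaps in a simple "average hash" as the signature and Hamming distance as the comparison, and the tiny pixel grids, the `database` entries, and the function names are all hypothetical, chosen only for illustration.

```python
from typing import Dict, List, Tuple

# A grayscale image as a grid of pixel values (0-255). Toy stand-in for a photo.
Image = List[List[int]]


def signature(image: Image) -> Tuple[int, ...]:
    """Average hash (an assumed stand-in for a real object-based signature):
    one bit per pixel, set when the pixel is brighter than the image mean."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return tuple(1 if p > mean else 0 for p in pixels)


def distance(a: Tuple[int, ...], b: Tuple[int, ...]) -> int:
    """Hamming distance: the number of positions where two signatures differ."""
    return sum(x != y for x, y in zip(a, b))


def search(query: Image, database: Dict[str, Image], top_k: int = 3) -> List[Tuple[str, int]]:
    """Compare the query signature against every database item and
    return the closest matches first."""
    query_sig = signature(query)
    scored = [(name, distance(query_sig, signature(img))) for name, img in database.items()]
    scored.sort(key=lambda item: item[1])  # smallest distance = best match
    return scored[:top_k]


# Hypothetical image database with three tiny 2x4 "images".
database = {
    "book_cover": [[200, 200, 10, 10], [200, 200, 10, 10]],
    "logo": [[10, 200, 10, 200], [200, 10, 200, 10]],
    "landmark": [[10, 10, 10, 10], [200, 200, 200, 200]],
}

# A slightly noisy photo of the book cover.
query = [[190, 210, 20, 5], [205, 195, 15, 12]]
results = search(query, database)
print(results)
```

In this toy run the noisy photo still ranks `book_cover` first, because its bright and dark regions fall in the same places. A real system would need signatures that hold up under rotation, scale, and lighting changes, and an index that avoids comparing against every item one by one.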

Right now, Google Goggles doesn’t work well with food, cars, plants, or animals. But that’s going to change. Developers say the app will soon be able to recognize plants by their leaves and even suggest chess moves by “seeing” an image of your current board.

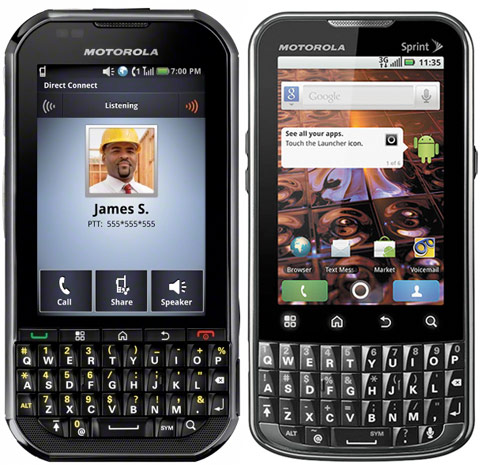

Google Goggles is currently a Labs product and is only available on mobile phones running Android 1.6 or later. There is no word yet on when Google Goggles will be available on the iPhone, but Google is working on it. Stay tuned and I’ll let you know when it’s released.

It’s just great news. Way to go, Google!